AI can either amplify or erase cultural diversity. At BlackWeek’s “Diverse Data Matters – DE&AI” session, industry leaders explained why inclusive data is key to shaping advertising’s future.

With AI, everyone’s talking about efficiency, scale, and optimization. But at BlackWeek’s “Diverse Data Matters – DE&AI” session, the conversation turned toward the very real blind spots lurking in AI’s algorithms.

Sure, AI can streamline creative processes and power personalized ad experiences, but who’s making sure those experiences reflect the diversity of the real world? If we’re not careful, AI will simply reinforce existing biases, leaving marginalized communities out in the cold.

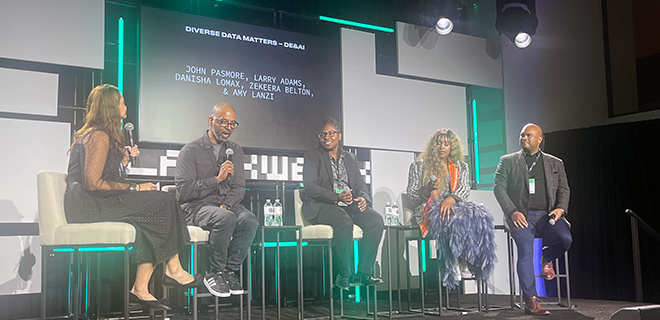

Moderated by Amy Lanzi (CEO, Digitas North America), the session brought together industry heavyweights Larry Adams (XStereotype), John Pasmore (Latimer), Danisha Lomax (Digitas), and Zekeera Belton (Collage Group) to unpack the critical issue of diversity in AI.

The consensus? AI is only as good as the data it’s trained on, and right now, that data isn’t doing enough to reflect the full spectrum of human experience.

AI Is Nothing Without Diverse Data

Let’s face it, AI doesn’t magically pull insights out of thin air. “AI’s generative output is only as strong as the data powering it,” shared Adams. And that’s where the problem starts: with the data feeding most AI systems.

The data often produces shallow, surface-level insights, without the cultural depth to reflect complex human experiences. For Adams, the founder of XStereotype, this is unacceptable. “We need to ensure that the connection points AI makes reflect diverse human experiences, not just some generic output,” he said.

That’s the problem he’s working to solve. XStereotype’s mission is to fill AI’s knowledge gaps by feeding it richer, more authentic data signals. “Data is the new advocacy,” he explained. AI’s power isn’t just in automation; it’s in its potential to amplify the voices of underrepresented communities. But that potential is still largely untapped.

“A lot of today’s intelligence? I wouldn’t trust it,” Adams added. Blindly trusting AI to get things right, without questioning its sources, carries real risk.

Filling the Cultural Blind Spots

The idea that AI has cultural blind spots isn’t new, but Pasmore, founder of Latimer, explained just how deep those gaps go. Much of Black and Brown history remains completely inaccessible to AI systems. “There’s a tremendous wealth of cultural data that’s simply not being tapped into,” he said. “We’ve spoken to major Black universities with millions of unscanned pages of historical documents — none of that is feeding into the AI models being used today.”

Latimer is tackling that issue head-on with a model built on retrieval-augmented generation (RAG), an approach that pulls relevant passages from a curated document collection into the model’s responses. Think of it as an alternative to mainstream models like ChatGPT, one that prioritizes factual data from underrepresented communities, such as archives from The Amsterdam News and dissertations from Black scholars.

Without sources like these, AI’s picture of the world stays incomplete. AI is only as “intelligent” as the information it’s fed, and if that information leaves out entire cultures, the results will be predictably skewed.

Humans Still in the Driver’s Seat — For Now

Zekeera Belton, Vice President of Customer Success & Cultural Strategist at Collage Group, reminded the audience that for all of AI’s power, human oversight is still critical, especially when it comes to cultural representation. “AI is a tool to enhance our work, not replace it,” she explained. AI can speed up processes, but without human eyes to validate the outputs, there’s a real risk that it could miss key cultural nuances or even reinforce harmful stereotypes.

Gathering surface-level insights isn’t enough. Belton stressed that cultural fluency should be at the heart of any AI-driven strategy. Brands should dig deeper, examining things like multi-generational family dynamics within Hispanic communities or the nuances of Black consumer behavior.

“You can’t just say ‘Latinos love their families’ and leave it at that,” Belton said. “It’s about understanding the layers of cultural specificity.” These are the nuances AI models can’t yet grasp without diverse, high-quality data, and that’s where human intervention still matters most.

The Challenge of Scale: Does Bigger Mean Better?

AI may have the potential to scale inclusivity, but Danisha Lomax, EVP, Head of Client Inclusivity & Impact at Digitas, challenged the industry’s obsession with size.

“There’s this idea that everything has to be scalable, but I’m calling BS,” she said. Pushing back on the notion that bigger is always better, Lomax argued that the real issue is authenticity: ensuring AI’s outputs truly resonate with diverse audiences, even if that means working with smaller, more targeted data sets.

“We don’t need millions of data points to know something is culturally significant,” she said. Reaching scale shouldn’t come at the expense of cultural relevance. The challenge is ensuring that the data fed into AI systems reflects the real, lived experiences of diverse communities; otherwise, AI will continue to perpetuate the same biases that have historically sidelined these groups.

The Road Ahead: AI and the Future of Diversity

So where do we go from here? According to Adams, the future of advertising will increasingly rely on decision trees: automated systems that make real-time decisions based on data inputs. But there’s a caveat. “We’re trusting out-of-the-box AI to make decisions before we let people of color make those decisions,” Adams warned. Without diverse data sets feeding these decision trees, the results could leave marginalized voices out of the conversation altogether.

Pasmore agreed, adding that while AI has the potential to emulate human decision-making, we’re still in the “first inning” of this technology. “At some point, AI will be indistinguishable from human responses,” he said. “But we have to stay on our toes and ensure it’s being used responsibly.”

The future may be automated, but if we’re not intentionally feeding diverse, inclusive data into these systems, we’ll just end up automating more of the same problems.

Moving Beyond the Bias

The session’s message was clear: It’s time for brands, agencies, and tech companies to take responsibility for the data they use to power AI.

“We can’t become servants to the technology,” Adams reminded us. “We have to tell it what to do.” That means advocating for more diverse data sets, creating frameworks that prioritize cultural insights, and holding the people who build and deploy AI accountable for the outputs it generates.

AI can revolutionize advertising and marketing, but only if it’s built on a foundation of inclusivity. As Pasmore pointed out, “If the data isn’t there, AI can’t help you.”